A processing component (component for short) represents a standalone application or module defined by the following parameters:

- Input description: the type of data that the component accepts as its input source (e.g. images, raster maps, vector maps, sensors).

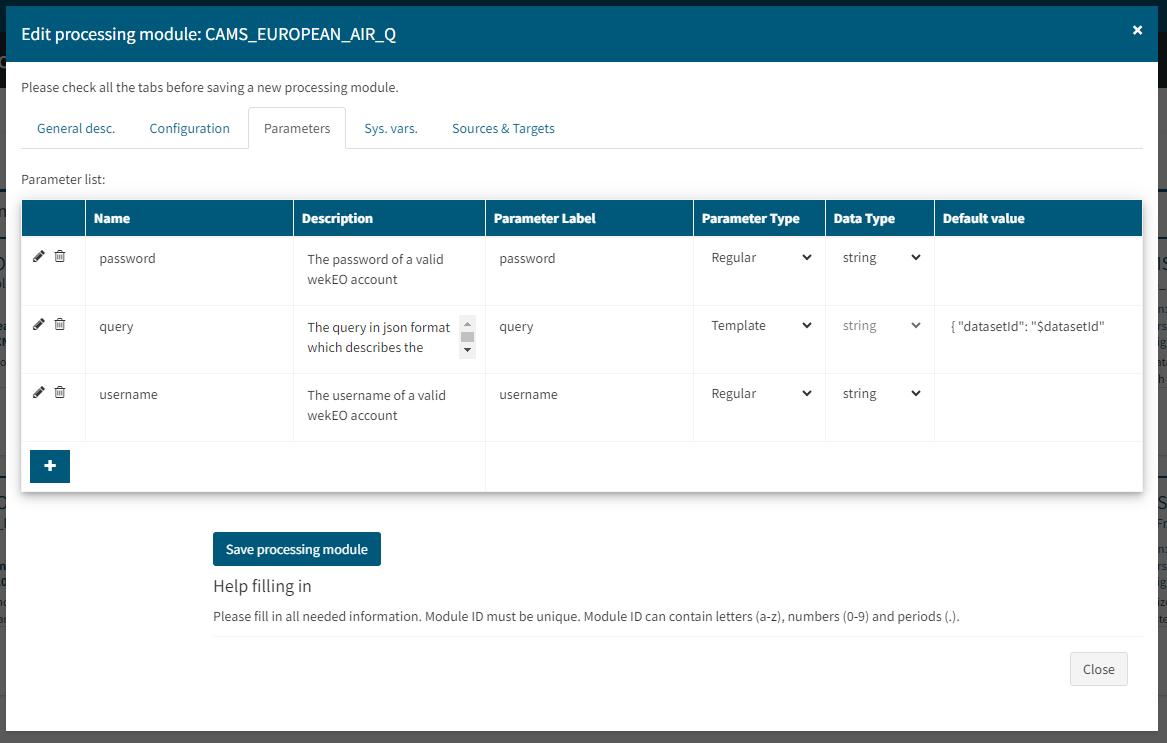

- Processing operation with execution parameters: the operation that the component executes, together with the list of parameters it accepts.

- Output description: the type of data produced by the processing operation. The output can consist of one or more files.

A component is viewed as a generic execution resource. Its parameter list should make it possible to define the input location (where the component expects to find the data it consumes) and the output location (where the processing results are persisted).
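To make the three parameters and the two locations concrete, here is a minimal Python sketch of a component descriptor. The class, its field names and the example values are assumptions made for illustration only; they are not taken from the TAO code base.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, List


@dataclass(frozen=True)
class ComponentDescriptor:
    """Hypothetical descriptor; field names are illustrative, not TAO's API."""
    name: str
    input_types: List[str]    # input description, e.g. ["raster"]
    operation: str            # processing operation the component executes
    output_types: List[str]   # output description (one or more files)
    parameters: Dict[str, str] = field(default_factory=dict)

    def with_locations(self, input_location: str,
                       output_location: str) -> "ComponentDescriptor":
        """Return a copy whose parameter list also carries the I/O locations."""
        params = dict(self.parameters)
        params["input_location"] = input_location
        params["output_location"] = output_location
        return replace(self, parameters=params)


# Hypothetical component and paths, for illustration only.
resample = ComponentDescriptor(
    name="resample",
    input_types=["raster"],
    operation="resample",
    output_types=["raster"],
    parameters={"resolution": "10"},
)
configured = resample.with_locations("/data/in", "/data/out")
print(configured.parameters)
```

Passing the locations through the ordinary parameter list, rather than through dedicated fields, keeps the component generic: whatever executes it only needs to fill in parameters.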

Each processing component is described in terms of its expected input, its processing module (described in the paragraphs below) and its output.

There are three kinds of components that can be used in a workflow:

- System components: these are the components that ship with the system and can be used by everyone. Currently, TAO ships with all the components of Orfeo Toolbox 6.4 and SNAP 6.0.0.

- User components: a user of the platform can also upload their own processing algorithms as Python or R scripts. A user component can be used either only by its owner (private mode) or by everyone (public mode, in which case it becomes part of the contributor list). Users who upload components into the system are responsible for managing them.

- Contributor components list: all user components uploaded into the system and declared for public use are part of this list.
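The access rules implied by these three kinds can be sketched in a few lines of Python. The `Visibility` enum, the `Component` class and both helper functions are hypothetical names chosen for this example; the actual TAO data model may differ.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Visibility(Enum):
    SYSTEM = "system"    # ships with the platform, usable by everyone
    PRIVATE = "private"  # user component, usable by its owner only
    PUBLIC = "public"    # user component on the contributor list


@dataclass(frozen=True)
class Component:
    name: str
    visibility: Visibility
    owner: Optional[str] = None  # None for system components


def usable_by(component: Component, user: str) -> bool:
    """A private component is restricted to its owner; all others are open."""
    if component.visibility is Visibility.PRIVATE:
        return component.owner == user
    return True


def contributor_list(components: List[Component]) -> List[Component]:
    """All user components that were declared for public use."""
    return [c for c in components if c.visibility is Visibility.PUBLIC]


# Hypothetical catalogue, for illustration only.
catalogue = [
    Component("band-math", Visibility.SYSTEM),
    Component("my-ndvi", Visibility.PRIVATE, owner="alice"),
    Component("cloud-mask", Visibility.PUBLIC, owner="bob"),
]
visible_to_bob = [c.name for c in catalogue if usable_by(c, "bob")]
shared = [c.name for c in contributor_list(catalogue)]
```

In this sketch, `visible_to_bob` holds the system component and the public one, while `shared` holds only the public user component.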

The person who uploads a component into the system is also responsible for providing all of its necessary dependencies. The component is saved into a repository either as an archive or as an installation kit. The repository can be a folder tree in the file system or a database.

Processing modules are heterogeneous with respect to their implementation language and operating system. The TAO framework does not aim to integrate processing modules from several operating systems at the same time (i.e. multiple modules deployed on different operating systems at once). Nevertheless, TAO aims to be as OS-independent as possible and to allow the integration of modules written in different programming languages (such as C/C++, Java or Python).

In an ideal scenario, a processing module would be an independent executable (i.e. one without any additional runtime dependencies). However, this is seldom the case, and different modules have different runtime dependencies. It is therefore critical that each module has its own appropriate dependencies available when it executes. Moreover, the dependencies of one module may break the execution of another module if both are executed on the same machine, in the same memory space.

With these constraints in mind, the framework provides each module with its appropriate runtime dependencies, without disrupting the functioning of other modules, by using Docker execution containers. Each container hosts a processing module together with its runtime dependencies and is isolated from other containers that may execute on the same machine.
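As a rough illustration of container-based execution, the Python sketch below assembles a `docker run` command line for a module. It only builds the argument list and does not start a container. The helper function, the image name `example/otb:6.4.0` and the host paths are assumptions made for this example, not names used by TAO; `--rm` and `-v` are standard Docker CLI options.

```python
from typing import Dict, List


def docker_run_command(image: str, module_args: List[str],
                       volumes: Dict[str, str]) -> List[str]:
    """Build the argv for running one module in its own container.

    `volumes` maps host paths to container paths, so the module can read
    its input and persist its output outside the container.
    """
    cmd = ["docker", "run", "--rm"]  # --rm: remove the container on exit
    for host_path, container_path in volumes.items():
        cmd += ["-v", f"{host_path}:{container_path}"]
    cmd.append(image)
    cmd += module_args
    return cmd


# Hypothetical image name and paths, for illustration only.
cmd = docker_run_command(
    image="example/otb:6.4.0",
    module_args=["otbcli_BandMath", "-il", "/data/in/scene.tif",
                 "-out", "/data/out/result.tif", "-exp", "im1b1*2"],
    volumes={"/srv/in": "/data/in", "/srv/out": "/data/out"},
)
print(" ".join(cmd))
```

The resulting list could be passed to `subprocess.run(cmd, check=True)` on a machine where Docker and the image are available. Because each module runs from its own image, the dependencies of one module never reach another.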

Out of the box, TAO ships with predefined Docker images for OTB 6.4.0, SNAP 6.0.0, Python (with the most common Python scientific libraries) and R. Additional Docker images can be registered if available.