The TAO platform can scale from a single node to as many nodes as are made available. A node is a computing resource (a machine) with a defined capacity (number of processors, amount of RAM and disk space) that acts as a container for executing processing components. The platform provides a dedicated administrative interface for managing the processing nodes, including through remote shells.
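To make the node concept concrete, the following Python sketch models a node as a record of its capacity. It keeps registered nodes in a registry that can report aggregate capacity and find the nodes able to host a processing component. The names `Node`, `NodeRegistry` and `candidates` are hypothetical and do not come from the TAO code base. The fields simply mirror the capacity attributes listed above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Node:
    """A processing node: a machine with a fixed capacity (hypothetical model)."""
    hostname: str
    processors: int
    memory_mb: int
    disk_gb: int


@dataclass
class NodeRegistry:
    """Keeps track of the nodes registered with the platform (illustrative only)."""
    nodes: dict = field(default_factory=dict)

    def register(self, node: Node) -> None:
        # Registering the same hostname again replaces the previous description.
        self.nodes[node.hostname] = node

    def unregister(self, hostname: str) -> None:
        # Removing an unknown hostname is silently ignored.
        self.nodes.pop(hostname, None)

    def total_processors(self) -> int:
        # Aggregate capacity grows as more nodes are registered.
        return sum(n.processors for n in self.nodes.values())

    def candidates(self, processors: int, memory_mb: int, disk_gb: int) -> list:
        """Return, sorted, the hostnames of nodes whose capacity covers the request."""
        return sorted(
            n.hostname
            for n in self.nodes.values()
            if n.processors >= processors
            and n.memory_mb >= memory_mb
            and n.disk_gb >= disk_gb
        )


registry = NodeRegistry()
registry.register(Node("node-01", processors=4, memory_mb=8192, disk_gb=100))
registry.register(Node("node-02", processors=16, memory_mb=65536, disk_gb=500))
print(registry.total_processors())          # 20
print(registry.candidates(8, 16384, 200))   # ['node-02']
```

Adding a node increases the aggregate capacity without any change on the caller side, which is the property a platform relies on when scaling out.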

Currently, the framework works with existing nodes (Linux machines with a default installation, i.e. with no particular configuration) that are registered with it. Work is in progress to integrate the OpenStack Nova API (used by several cloud providers) so that computing machines can be created and registered dynamically. Once completed, this will allow resources to be expanded on demand to achieve computational scalability, without any operator intervention.

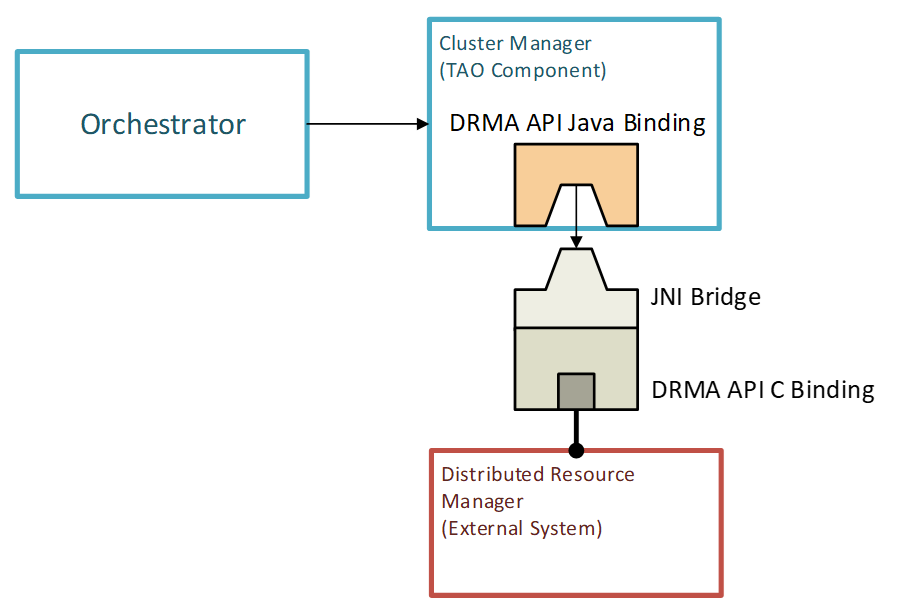

The actual execution of workflows is delegated to dedicated software, usually called a Cluster Resource Manager. To decouple the core of the TAO framework from the specific Cluster Resource Manager in use, the latter must provide a DRMAA-compliant interface.

DRMAA (Distributed Resource Management Application API) provides standardized access to Distributed Resource Management (DRM) systems for execution resources. It focuses on job submission, job control, reservation management, and the retrieval of job and machine monitoring information.
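As an illustration of the job-control part of the API, the sketch below uses the five job control actions defined by DRMAA 1.0 (SUSPEND, RESUME, HOLD, RELEASE and TERMINATE) and a subset of its job states. The transition table and the `control` function are a simplified assumption made for this example, not part of the specification. With a real DRM system, the resulting state is reported by the system itself.

```python
from enum import Enum


class JobState(Enum):
    """A subset of the DRMAA 1.0 job states (simplified for illustration)."""
    QUEUED_ACTIVE = "queued_active"
    USER_ON_HOLD = "user_on_hold"
    RUNNING = "running"
    USER_SUSPENDED = "user_suspended"
    DONE = "done"
    FAILED = "failed"


class Action(Enum):
    """The DRMAA 1.0 job control actions."""
    SUSPEND = "suspend"
    RESUME = "resume"
    HOLD = "hold"
    RELEASE = "release"
    TERMINATE = "terminate"


FINAL_STATES = {JobState.DONE, JobState.FAILED}

# Assumed, simplified transitions: (action, current state) -> next state.
TRANSITIONS = {
    (Action.HOLD, JobState.QUEUED_ACTIVE): JobState.USER_ON_HOLD,
    (Action.RELEASE, JobState.USER_ON_HOLD): JobState.QUEUED_ACTIVE,
    (Action.SUSPEND, JobState.RUNNING): JobState.USER_SUSPENDED,
    (Action.RESUME, JobState.USER_SUSPENDED): JobState.RUNNING,
}


def control(state: JobState, action: Action) -> JobState:
    """Apply a control action to a job in `state` and return its new state."""
    if state in FINAL_STATES:
        raise ValueError(f"job already finished ({state.name})")
    if action is Action.TERMINATE:
        # In this sketch, terminating any unfinished job marks it as failed.
        return JobState.FAILED
    try:
        return TRANSITIONS[(action, state)]
    except KeyError:
        raise ValueError(f"{action.name} not allowed in state {state.name}") from None
```

Rejecting actions on finished jobs keeps the example close to how a client typically treats final states: once a job is done or failed, only its exit information remains to be retrieved.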

Several DRMAA implementations currently exist, most of them written in C and targeting Linux.

The bridging-plugin approach taken by the TAO framework makes it easy to swap such implementations, keeping the framework loosely coupled to the actual DRM system in use.

The platform provides two such DRMAA-compliant plugins: one for SLURM (Simple Linux Utility for Resource Management) and one for Torque (an open-source resource manager derived from OpenPBS).
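The bridging-plugin idea can be sketched as follows, assuming a hypothetical `DrmBridge` interface that the framework core calls and a registry that maps a plugin name to an implementation. `InMemoryBridge` is a stand-in that only records submitted jobs. Real SLURM or Torque plugins would instead forward the calls to the corresponding DRMAA library. None of these names are part of the TAO API.

```python
from abc import ABC, abstractmethod
from itertools import count


class DrmBridge(ABC):
    """Hypothetical plugin contract between the core and a DRM system.

    Modelled loosely on DRMAA job submission and monitoring; not the TAO API.
    """

    @abstractmethod
    def submit(self, command: str, args: list) -> str:
        """Submit a job and return its identifier."""

    @abstractmethod
    def status(self, job_id: str) -> str:
        """Return the current state of a previously submitted job."""


class InMemoryBridge(DrmBridge):
    """Stand-in plugin that records jobs instead of talking to a real DRM."""

    def __init__(self, prefix: str):
        self.prefix = prefix
        self._ids = count(1)
        self.jobs = {}

    def submit(self, command, args):
        job_id = f"{self.prefix}-{next(self._ids)}"
        self.jobs[job_id] = (command, list(args))
        return job_id

    def status(self, job_id):
        # Every recorded job is reported as queued; unknown ids are undetermined.
        return "queued_active" if job_id in self.jobs else "undetermined"


# Plugin registry: the core looks plugins up by name, so swapping the DRM
# system becomes a configuration change rather than a code change.
PLUGINS = {
    "slurm": lambda: InMemoryBridge("slurm"),
    "torque": lambda: InMemoryBridge("torque"),
}


def run_step(plugin_name: str, command: str, args: list) -> tuple:
    """Core-side code: it depends only on the DrmBridge interface."""
    bridge = PLUGINS[plugin_name]()
    job_id = bridge.submit(command, args)
    return job_id, bridge.status(job_id)


print(run_step("slurm", "/bin/echo", ["hello"]))   # ('slurm-1', 'queued_active')
print(run_step("torque", "/bin/echo", ["hello"]))  # ('torque-1', 'queued_active')
```

Because `run_step` only sees the `DrmBridge` interface, replacing `"slurm"` with `"torque"` changes the target system without touching the core code.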